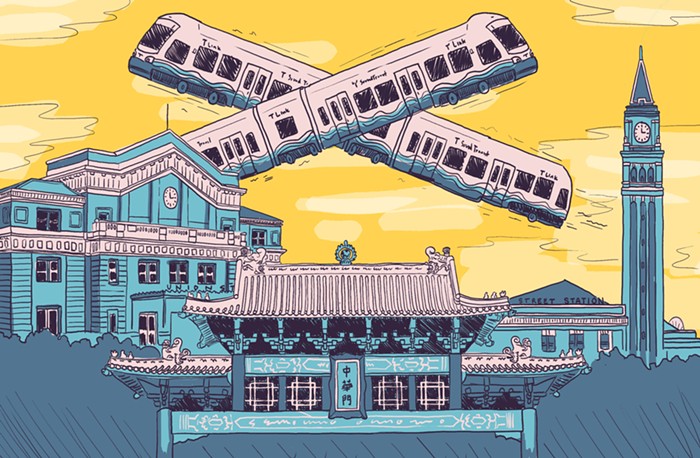

Not too long ago, I walked across the fly-over section of West Georgia Street in Vancouver, BC. My destination was a Spanish restaurant on Main Street called Bodega on Main. Its food is respectable and its table covers ugly, in a charming way. Twenty minutes before, I had been in a hotel room with a view of the library designed by architect Moshe Safdie, the great-uncle of the Safdie brothers (Uncut Gems, Good Time). While crossing the overpass, which took about 15 minutes, I saw nine trains going to and from the downtown area. This is the kind of frequency that changes the character of a city's transit system. "More is different," the American physicist P.W. Anderson maintained in a brief but influential 1972 paper. His point: two systems made of the same elements can be radically different if one has a much larger number of those elements. This is the basis of emergence theory. Seattle's light rail system is not at all like the one in Vancouver, the SkyTrain. Here, platform-waiting is hard to avoid; there, a train has either just left or is about to arrive.

The SkyTrain is also fully automated. And this, it turns out, is the substance of this post: Automation isn't always a bad thing.

Fatalities, injuries, massive system delays to thousands of people, and busted trains will continue because of MLK Way until City of Seattle government chooses to radically tame the de facto highway into a street. https://t.co/gA1No0ZKod

— The Urbanist (@UrbanistOrg) January 31, 2024

What we've learned since Link opened in 2009 is that trains need to be elevated or in the ground. That's the only way to avoid the state-supported stupidity of cars. And if our system, which still has only one line, had been built in this rational way, there would be no bad encounters with street-level traffic and no need for drivers. The trains could do the job by themselves.

But what does it mean for automation to be an obviously bad thing for workers in general but a good thing for public use? Can we learn from this clear functional difference? Self-acting machines that keep labor costs down and wage earners disciplined are ontologically not the same as those that are, without question, optimal in the wider social (and therefore democratic) sense. An ontological distinction also exists between the fantasy of self-driving cars and the reality of automated trains.

Billions of dollars have been spent trying and failing to achieve something I do every morning with a smartphone and a train https://t.co/EXM5Ern3PI

— the prince with a thousand enemies ♂️ (@jaketropolis) February 4, 2024

Though the dream of fully automating the automobile is still far from realization ("GM’s Reuss Hopes Cruise Will Regain Trust In ‘Four To Five’ Years"), capitalists, with financial and political encouragement from the state, have poured history-making resources into it. But robots that move people are already here. Indeed, fully automated trains have been with us in a meaningful way since the 1980s. (Seattle also has an excellent and punctual automated line, but it is short and only serves Seattle-Tacoma International Airport.) And what will self-driving cars never become? As safe and effective as the SkyTrain. These people-moving machines arrive on time, are fast, and rarely make mistakes.

Also, the anxiety caused by robots that only concentrate wealth in the hands of a few property owners is absent when it comes to robots that serve, in the main, those with little to no property. We do not fear that automated trains will take over the world or have some secret agenda nefariously growing and glowing in their circuitry. We trust these robots. They are our comrades. Can this feeling of confidence be attributed to what some theorize will be the end of capitalism and the next level of economic reality, fully automated luxury communism? (I will leave that question for another post.)

What does all of this mean? What am I trying to get at? The answer might be partially found in this key passage in Donna Haraway's groundbreaking A Cyborg Manifesto:

The main trouble with cyborgs, of course, is that they are the illegitimate offspring of militarism and patriarchal capitalism, not to mention [formal] state socialism. But illegitimate offspring are often exceedingly unfaithful to their origins. Their fathers, after all, are inessential.

True that. But most of the machines in the market system can never be anything other than faithful to their origins. The automobile is obviously such a machine (self-driving or not). Why? Because it has too much fantasy in it to be useful (or disenchanted) in the widest possible sense. This is why traffic engineering can never be, in essence, a technical matter. It can only be cultural, like conducting a Sunday service or refereeing a Monday night football match. This is why AI and other smart technologies are condemned to continue car culture as it is: traffic, more traffic, endless traffic. Technical solutions, as defined by the AI researcher Dr. Joy Buolamwini in her book Unmasking AI, will never reach and penetrate the phantasmagoria of a Ford F-150. When it comes to this and other massive automobiles, prayers and spells are just as effective as computer programs and processing systems. A train, however, is accessible to the social world, which is not the same as the cultural one. It is, for the masters of our dominant culture (capitalism), the worst kind of machine: not only is it unfaithful to its origins, but its problems and challenges are mostly technical.

I think about this every time I'm on Vancouver's SkyTrain or SeaTac's SEA Underground or JFK's AirTrain.

Dr. Joy Buolamwini will discuss her book with Charles Mudede at Town Hall on Sunday, February 18.